The Illinois method for solving equations

Such a long time since a last post! Well, that's academic life for you ...

If you look at pretty much any modern textbook on numerical methods, of which there are many, you'll find that the following methods will be given for the solution of a single non-linear equation \(f(x)=0\):

- direct iteration, also known as fixed-point iteration

- bisection method

- method of false position, also known as regula falsi

- secant method

- Newton's method, also known as the Newton-Raphson method

Occasionally a text might specify, or mention, one or two more, but these five seem to be the "classic" methods. All of the above have their advantages and disadvantages:

- direct iteration is easy, but can't be guaranteed to converge, nor converge to a particular solution

- bisection is guaranteed to work (assuming the function \(f(x)\) is continuous in a neighbourhood of the solution), but is very slow

- method of false position is supposed to improve on bisection, but has its own problems, including also slow convergence

- the secant method is quite fast (order of convergence is about 1.6) but is not guaranteed to converge always

- Newton's method is fast (quadratic convergence), theoretically very straightforward, but does require the computation of the derivative \(f'(x)\) and is not guaranteed to converge.

Methods such as the Brent-Dekker-van Wijngaarden method - also known as Brent's method, Ridder's method, both of which may be considered as a sort of amalgam of bisection and inverse quadratic interpolation, are generally not covered in introductory texts, although some of the newer methods are both simple and guaranteed to converge quickly. All these methods have the advantage of not requiring the computation of the derivative.

This blog post is about a variation of the method of false position, which is amazingly simple, and yet extremely fast.

The Illinois method

This method seems to go back to 1953 when it was published in an internal memo at the University of Illinois Computer Laboratory by J. N. Snyder, "Inverse interpolation, a real root of $f(x)=0", University of Illinois Digital Computer Laboratory, ILLIAC I Library Routine H1-71 4

Since then it seems to have been called the "Illinois method" by almost everybody, although a few writers are now trying to name it "Snyder's method".

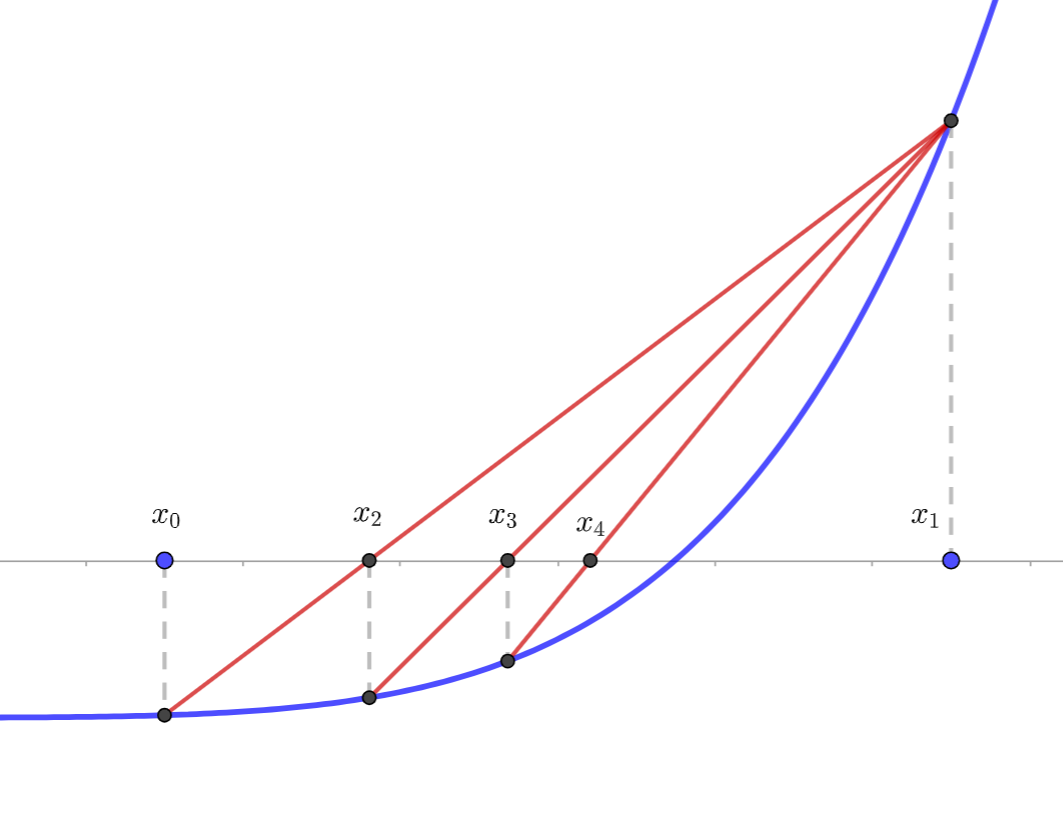

To start, note a well known problem with false position: if the function is concave (or convex) in a neighbourhood of the root including the bracketing interval, then the values will converge from one side only:

This slows down convergence. Snyder's insight was that if this behaviour started: that is, if there were two consecutive iterations \(x_i\), \(x_{i+1}\) on one side of the root, then for the next iteration the secant would be computed not with the function value \(f(x_n)\) on the other side of the root, but half that function value \(f(x_n)/2\).

The algorithm for making this work has been described thus by M. Dowell and P. Jarratt ("A modified regula falsi method for computing the root of an equation", BIT 11, 1971, pp168 - 174.) At each stage we keep track of the \(x\) values \(x_i\) and the corresponding function values \(f_i=f(x_i)\). As usual \(x_{i-1}\) and \(x_i\) bracket the root:

Start by performing the usual secant operation

\[ x_{i+1}=x_i-f(x_i)\frac{x_i-x_{i-1}}{f(x_i)-f(x_{i-1})}=\frac{x_{i-1}f(x_i)-x_if(x_{i-1})}{f(x_i)-f(x_{i-1})} \] and set \(f_{i+1}=f(x_{i+1})\). Then:

- if \(f_if_{i+1}<0\), replace \((x_{i-1},f_{i-1})\) with \((x_i,f_i)\)

- if \(f_if_{i+1}>0\), replace \((x_{i-1},f_{i-1})\) with \((x_{i-1},f_{i-1}/2)\)

In each case we replace \((x_i,f_i)\) by \((x_{i+1},f_{i+1})\).

Before we show how fast this can be, here's a function to perform false position, in Julia:

function false_pos(f,a,b;niter = 20)

if f(a)*f(b)>0

error("Values are not guaranteed to bracket a root")

else

for k in 1:niter

c = b - f(b)*(b-a)/(f(b)-f(a))

if f(b)*f(c)<0

a = b

end

b = c

@printf("%2d: %.15f, %.15f, %.15f\n",k,a,b,abs(a-b))

end

end

end

Note that we have adopted the Dowell-Jarratt logic, so that if \(f_if_{i+1}>0\), then we do nothing. And here \(a\), \(b\), \(c\) correspond to \(x_{i-1}\), \(x_i\), and \(x_{i+1}\).

This function, as you see, doesn't so much return a value, but simply prints out the current bracketing values, along with their difference. Here's an example:

Julia> f(x) = x^5 - 2

Julia> false_pos(f,0.5,1.5)

1: 1.500000000000000, 0.760330578512397, 0.739669421487603

2: 1.500000000000000, 0.936277160385007, 0.563722839614993

3: 1.500000000000000, 1.041285513445667, 0.458714486554333

4: 1.500000000000000, 1.097156710176020, 0.402843289823980

5: 1.500000000000000, 1.124679454971997, 0.375320545028003

6: 1.500000000000000, 1.137668857062543, 0.362331142937457

7: 1.500000000000000, 1.143668984638562, 0.356331015361438

8: 1.500000000000000, 1.146412444361109, 0.353587555638891

9: 1.500000000000000, 1.147660927013766, 0.352339072986234

10: 1.500000000000000, 1.148227852400553, 0.351772147599447

11: 1.500000000000000, 1.148485034668113, 0.351514965331887

12: 1.500000000000000, 1.148601651598731, 0.351398348401269

13: 1.500000000000000, 1.148654519726769, 0.351345480273231

14: 1.500000000000000, 1.148678485212590, 0.351321514787410

15: 1.500000000000000, 1.148689348478166, 0.351310651521834

16: 1.500000000000000, 1.148694272572126, 0.351305727427874

17: 1.500000000000000, 1.148696504543074, 0.351303495456926

18: 1.500000000000000, 1.148697516236793, 0.351302483763207

19: 1.500000000000000, 1.148697974810134, 0.351302025189866

20: 1.500000000000000, 1.148698182668834, 0.351301817331166

In fact, because of the problem we showed earlier, which this example exemplifies, the distance between bracketing values converges to a non-zero value (hence is more-or-less irrelevant as a measure of convergence), and it's the values in the second column which converge to the root.

Since \(2^{1/5}=1.148698354997035\), we have got only 6 decimal places at 20 iterations, which is no faster than bisection.

Here's the Illinois method. Note that it is very similar to the above false position function, except that we are also keeping track of function values:

function illinois(f,a,b;num_iter = 20)

if f(a)*f(b) > 0

error("Values given are not guaranteed to bracket a root")

else

fa, fb = f(a), f(b)

for k in 1:num_iter

c = b - fb*(b-a)/(fb-fa)

fc = f(c)

if fb*fc < 0

a, fa = b, fb

else

fa = fa/2

end

b, fb = c, fc

@printf("%2d: %.15f, %.15f, %.15f\n",k,a,b,abs(a-b))

end

end

end

And with the same function and initial bracketing values as before:

julia> illinois(f,0.5,1.5)

1: 1.500000000000000, 0.760330578512397, 0.739669421487603

2: 1.500000000000000, 0.936277160385007, 0.563722839614993

3: 1.500000000000000, 1.113315730198992, 0.386684269801008

4: 1.113315730198992, 1.179659804462764, 0.066344074263773

5: 1.179659804462764, 1.146786019205345, 0.032873785257419

6: 1.179659804462764, 1.148597847114352, 0.031061957348412

7: 1.148597847114352, 1.148787731780184, 0.000189884665832

8: 1.148787731780184, 1.148698339356448, 0.000089392423736

9: 1.148787731780184, 1.148698354994601, 0.000089376785583

10: 1.148698354994601, 1.148698354999468, 0.000000000004867

11: 1.148698354994601, 1.148698354997035, 0.000000000002434

12: 1.148698354994601, 1.148698354997035, 0.000000000002434

13: 1.148698354997035, 1.148698354997035, 0.000000000000000

14: 1.148698354997035, 1.148698354997035, 0.000000000000000

15: 1.148698354997035, 1.148698354997035, 0.000000000000000

16: 1.148698354997035, 1.148698354997035, 0.000000000000000

17: 1.148698354997035, 1.148698354997035, 0.000000000000000

18: 1.148698354997035, 1.148698354997035, 0.000000000000000

19: 1.148698354997035, 1.148698354997035, 0.000000000000000

20: 1.148698354997035, 1.148698354997035, 0.000000000000000

Here the bracketing values do indeed get close together so that their difference converges to zero (as you'd expect), and also by 13 iterations we have obtained 15 decimal place accuracy. Dowell and Jarratt show that this method has an efficiency index \(E = 3^{1/3}\approx 1.442\); here \(E=p/C\) where \(p\) is the order of convergence and \(C\) is the "cost" per iteration (measured in terms of arithmetic operations). As we see in the example below, the number of correct significant figures roughly triples every three iterations.

A more useful output can be obtained by slightly adjusting the above function so

that it prints out the most recent values, and their successive absolute

differences. Even better, we can use BigFloat to see a few more digits:

Julia> setprecision(200)

Julia> illinois(f,BigFloat("0.5"),BigFloat("1.5"))

1: 0.760330578512396694214876033057851239669421487603305785123967, 7.396694214876033e-01

2: 0.936277160385007078769511771881822637553598228912035496092550, 1.759465818726104e-01

3: 1.113315730198991546263163399473256828664229343275663554233298, 1.770385698139845e-01

4: 1.179659804462764330162645293397126720145901264006900448944524, 6.634407426377278e-02

5: 1.146786019205344856070782528108967774498747386650573905592277, 3.287378525741947e-02

6: 1.148597847114352112643459413647963815311973435038986609382286, 1.811827909007257e-03

7: 1.148787731780183703868308039549154052483519742061776232119911, 1.898846658315912e-04

8: 1.148698339356448159061880000968862518745749828816440530467845, 8.939242373554481e-05

9: 1.148698354994601301571564951679026859974778940076712488922696, 1.563815314250968e-08

10: 1.148698354999467954514826379562077090994393405782639396087335, 4.866652943261428e-12

11: 1.148698354997035006798616637583092713250045478960431687721718, 2.432947716209742e-12

12: 1.148698354997035006798626946777927545774018995974486715443598, 1.030919483483252e-23

13: 1.148698354997035006798626946777927633113682781851136780227430, 8.733966378587665e-35

14: 1.148698354997035006798626946777927589443850889097797505513711, 4.366983189275334e-35

15: 1.148698354997035006798626946777927589443850889097797505513711, 0.000000000000000e+00

16: 1.148698354997035006798626946777927589443850889097797505513712, 1.244603055572228e-60

17: 1.148698354997035006798626946777927589443850889097797505513711, 1.244603055572228e-60

18: 1.148698354997035006798626946777927589443850889097797505513711, 0.000000000000000e+00

19: 1.148698354997035006798626946777927589443850889097797505513712, 1.244603055572228e-60

20: 1.148698354997035006798626946777927589443850889097797505513711, 1.244603055572228e-60

The speed of reaching 60 decimal place accuracy is very much in keeping with the order of convergence being about 1.4. Alternatively, we'd expect the number of correct significant figures to roughly triple each three iterations.

The Illinois method is disarmingly simple, produces excellent results, and since it's a bracketing method, will be guaranteed to converge. What's not to like? Time to get it back in the textbooks!