Post-election swings

So the Australian federal election of 2022 is over as far as the public is concerned; all votes have been cast and now it's a matter of waiting while the Australian Electoral Commission tallies the numbers, sorts all the preferences, and arrives at a result. Because of the complications of the voting system, and of all the checks and balances within it, a final complete result may not be known for some days or even weeks. What is known, though, is that the sitting government has been ousted, and that the Australian Labor Party (ALP) will lead the new government. Whether the ALP amasses enough wins for it to govern with a complete majority is not known; they may have a "minority government" in coalition with either independent candidates or the Greens.

In Australia, there are 151 federal electorates or "Divisions"; each one corresponds to seat in the House of Representatives; the Lower House of the Federal government. The winner of an election is whichever party or coalition wins the majority of seats; the Prime Minister is simply the leader of the major party in that coalition. Australians thus have no say whatsoever in the Prime Minister; that is entirely a party matter, which is why Prime Ministers have sometimes been replaced in the middle of a term.

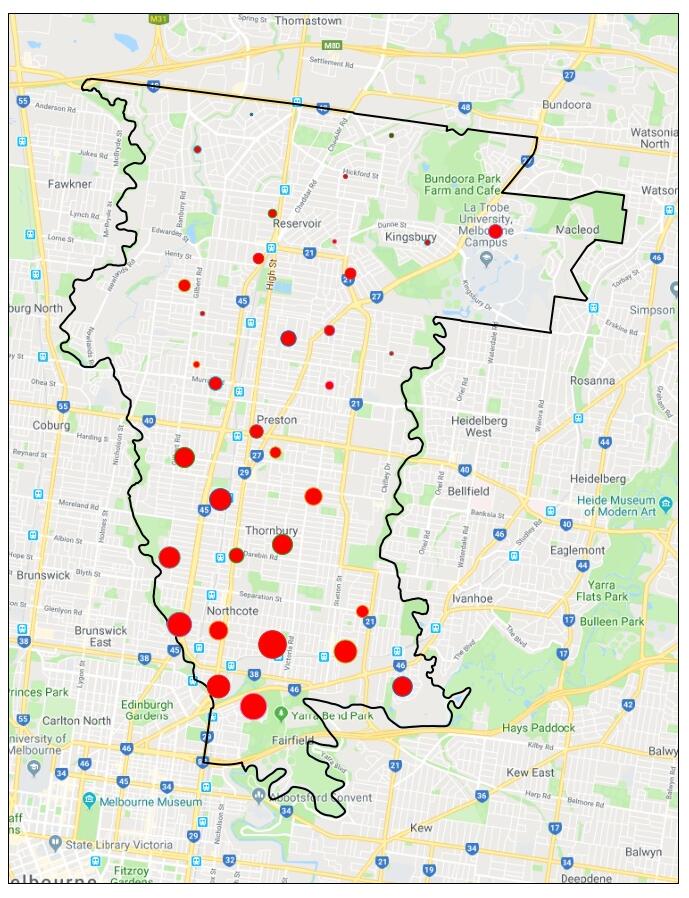

My concern is the neighbouring electorates of Cooper and Wills. Both are very similar both geographically and politically; both are Labor strongholds, in each of which the Greens have made considerable inroads. Indeed Cooper (called Batman until a few years ago) used to be one of the safest Labor seats in the country; it has now become far less so, and in each election the battle is now between Labor and the Greens. Both are urban seats, in Melbourne; in each of them the southern portion is more gentrified, diverse, and left-leaning, and the Northern part is more solidly working-class, and Labor-leaning. In each of them the dividing line is [Bell St](https://en.wikipedia.org/wiki/State_(Bell/Springvale)_Highway), known as the "tofu curtain". (Also as the "Latte Line" or the "Hipster-Proof Fence".)

Thus Greens campaigning consists of letting the southerners know they haven't been forgotten, and attempting to reach out to the northerners. This is mainly done with door-knocking by volunteers, and there is never enough time, or enough volunteers, to reach every household.

Anyway, here are some maps showing the Greens/Labor result at each polling booth. The size of the circle represents the ratio of votes: red for a Labor majority; green for a Greens majority. And the popup tooltip gives the name of the polling booth, and the swing either to Labor or to the Greens.

I couldn't decide the best way of displaying the swings, so in the end I just displayed the swing to Labor in all booths with a Labor majority, even if that swing was sometimes negative. And similarly for the Greens. Note that a large swing may correspond to a relatively small number of votes being cast.

If you get the shape into a state from which you can't get the stair affect working, click on the little circular arrows in the bottom left, which will reset the object to its initial orientation.

Impossible box

This was just a matter of creating the edges, finding a nice view, and using some trial and error to slice through the front most beams so that it seemed that hhe read beams were at the front.

Here is another widget, again you may have to wait for it to load.

Voting power (4): Speeding up the computation

Introduction and recapitulation

Recall from previous posts that we have considered two power indices for computing the power of a voter in a weighted system; that is, the ability of a voter to influence the outcome of a vote. Such systems occur when the voting body is made up of a number of "blocs": these may be political parties, countries, states, or any other groupings of people, and it is assumed that within every bloc all members will vote the same way. Indeed, in some legislatures, voting according to "the party line" is a requirement of membership.

Examples include the American Electoral College, in which the "voters" are the states; the Australian Senate, the European Union, the International Monetary Fund, and many others.

Given a set \(N=\{1,2,\ldots,n\}\) of voters and their weights \(w_i\), and a quota \(q\) required to pass any motion, we have represented this as

\[ [q;w_1,w_2,\ldots,w_n] \]

and we define a winning coalition as any subset \(S\subset N\) of voters for which

\[ \sum_{i\in S}w_i\ge q. \]

It is convenient to define a characteristic function \(v\) on all subsets of \(N\) so that \(v(S)=1\) if \(S\) is a winning coalition, and 0 otherwise. Given a winning coalition \(S\), a voter \(i\in S\) is necessary if \(v(S)-v(S-\{i\})=1\).

For any voter \(i\), the number of winning coalitions for which that voter is necessary is

\[ \beta_i = \sum_S\left(v(S)-v(S-\{i\})\right) \]

where the sum is taken over all winning coalitions. Then the Banzhaf power index is this value normalized so that the sum of all indices is unity:

\[ B_i=\frac{\beta_i}{\sum_{i=1}^n \beta_i}. \]

The Shapley-Shubik power index is defined by considering all permutations \(p\) of \(N\). Taken cumulative sums from the left, a voter \(p_k\) is pivotal if this is the first voter for which te cumulative sum is at least \(q\). For each voter \(i\), let \(\sigma_i\) be the number of permutations for which \(i\) is pivotal. Then

\[ S_i=\frac{1}{n!}\sigma_i \]

which ensures that the sum of all indices is unity.

Although these two indices seem very different, there is in fact a deep connection. Consider any permutation \(p\) and suppose that \(i=p_k\) is the pivotal voter, This voter will also be pivotal in all permutations for which \(i=p_k\) and the values to the right and left of \(i\) stay there: there will be \((k-1)!(n-k)!\) such permutations. However, we can consider the values up to and including \(i=p_k\) as a winning coalition for which \(i\) is necessary, which means we can write

\[ S_i=\sum_S\frac{(n-k)!(k-1)!}{n!}\left(v(S)-v(S-\{i\})\right) \]

which can be compared to the Banzhaf index above as being similar and with a different weighting function. Note that the above expression can be written as

\[ S_i=\sum_S\left(k{\dbinom n k}\right)^{-1}\left(v(S)-v(S-\{i\})\right) \]

which uses smaller numbers. For example, if \(n=50\) then \(n!\approx 3.0149\times 10^{64}\) but the largest binomial value is only approximately \(1.264\times 10^{14}\).

Computing with polynomials

We have also seen that if we define

\[ f_i(x) = \prod_{m\ne i}(1+x^{w_m}) \]

then

\[ \beta_i = \sum_{j=q-w_i}^{q-1}a_j \]

where \(a_j\) is the coefficient of \(x_j\) in \(f_i(x)\).

Similarly, if

\[ f_i(x,y) = \prod_{m\ne i}(1+yx^{w_m}) \]

then

\[ S_i=\sum_{k=0}^{n-1}\frac{k!(n-1-k)!}{n!}\sum_{j=q-w_i}^{q-1}c_{jk} \]

where \(c_{jk}\) is the coefficient of \(x^jy^k\) in the expansion of \(f_i(x,y)\).

In a previous post we have shown how to implement these in Python, using the Sympy library. However, Python can be slow, and using Cython is not trivial. We thus here show how to use Julia and its Polynomials package.

Using Julia for speed

The Banzhaf power indices can be computed almost directly from the definition:

using Polynomials

function px(n)

return(Polynomial([0,1])^n)

end

function banzhaf(q,w)

n = length(w)

inds = vec(zeros(Int64,1,n))

for i in 1:n

p = Polynomial([1])

for j in 1:i-1

p = p * px(v[j])

end

for j in i+1:n

p = p*px(v[j])

end

inds[i] = sum(coeffs(p)[k] for k in q-w[i]+1:q)

end

return(inds)

end

This will actually return the vector of \(\beta_i\) values which can then be easily normalized.

The function px is a "helper function" that simply returns the polynomial \(x^n\).

For the Shapley-Shubik indices, the situation is a bit trickier. There are indeed some Julia libraries for multivariate polynomials, but they seem (at the time of writing) to be not fully functional. However, consider the polynomials

\[ f_i(x,y)=\prod_{m\ne i}(1+yx^{w_m}) \]

from above. We can consider this as a polynomial of degree \(n-1\) in \(y\), whose coefficients are polynomials in \(x\). So if

\[ f_i(x,y) = 1 + p_1(x)y + p_2(x)y^2 +\cdots + p_{n-1}(x)y^{n-1} \]

then \(f_i(x,y)\) can be represented as a vector of polynomials

\[ [1,p_1(x),p_2(x),\ldots,p_{n-1}(x)]. \]

With this representation, we need to perform a multiplication by \(1+yx^p\) and also determine coefficients.

Multiplication is easy, noting at once that \(1+yx^p\) is linear in \(y\), and so we use the expansion of the product

\[ (1+ay)(1 + b_1y + b_2y^2 + \cdots + b_{n-1}y^{n-1}) \]

to

\[ 1 + (a+b_1)y + (ab_1+b_2)y^2 + \cdots + (ab_{n-2}+b_{n-1})y^{n-1} + ab_{n-1}y^n. \]

This can be readily programmed as:

function mulp1(n,p)

p0 = Polynonial(0)

px = Polynomial([0,1])

c1 = cat(p,p0,dims=1)

c2 = cat([p0,p,dims=1)

return(c1 + px^n .* c2)

end

The first two lines aren't really necessary, but they do make the procedure easier to read.

And we need a little program for extracting coefficients, with a result of zero

if the power is greater than the degree of the polynomial (Julia's coeff

function simply produces a list of all the coefficients in the polynomial.)

function one_coeff(p,n)

d = degree(p)

pc = coeffs(p)

if n <= d

return(pc[n+1])

else

return(0)

end

end

Now we can put all of this together in a function very similar to the Python function for computing the Shapley-Shubik indices with polynomials:

function shapley(q,w)

n = length(w)

inds = vec(zeros(Float64,1,n))

for i in 1:n

p = Polynomial(1)

for j in 1:i-1

p = mulp1(w[j],p)

end

for j in i+1:n

p = mulp1(w[j],p)

end

B = vec(zeros(Float64,1,n))

for j in 1:n

B[j] = sum(one_coeff(p[j],k) for k in q-w[i]:q-1)

end

inds[i] = sum(B[j+1]/binomial(n,j)/(n-j) for j in 0:n-1)

end

return(inds)

end

And a quick test (with timing) of the powers of the states in the Electoral

College; here ecv is the number of electors of all the states, in

alphabetical order (the first states are Alabama, Alaska, Arizona, and the last

states are West Virginia, Wisconsin, Washington):

ecv = [9, 3, 11, 6, 55, 9, 7, 3, 3, 29, 16, 4, 4, 20, 11, 6, 6, 8, 8, 4, 10, 11, 16, 10, 6,

10, 3, 5, 6, 4, 14, 5, 29, 15, 3, 18, 7, 7, 20, 4, 9, 3, 11, 38, 6, 3, 13, 12, 5, 10, 3]

@time(s = shapley(270,ecv));

0.722626 seconds (605.50 k allocations: 713.619 MiB, 7.95% gc time)

This is running on a Lenovo X1 Carbon, 3rd generation, using Julia 1.5.3. The operating system is a very recently upgraded version of Arch Linux, and currently using kernel 5.10.3.

Voting power (3): The American swing states

As we all know, American Presidential elections are done with a two-stage process: first the public votes, and then the Electoral College votes. It is the Electoral College that actually votes for the President; but they vote (in their respective states) in accordance with the plurality determined by the public vote. This unusual system was devised by the Founding Fathers as a compromise between mob rule and autocracy, of which both they were determined to guard against. The Electoral College is not now an independent body: in all states but two all electoral college votes are given to the winner in that state. This means that the Electoral College may "amplify" the public vote; or it may return a vote which differs from the public vote, in that a candidate may receive a majority of public votes, and yet still lose the Electoral College vote. This means that there are periodic calls for the Electoral College to be disbanded, but in reality that seems unlikely. And in fact as far back as 1834 the then President, Andrew Jackson, was demanding its disbanding: a President, according to Jackson, should be a "man of the people" and hence elected by the people, rather than by an elite "College". This is one of the few instances where Jackson didn't get his way.

The initial idea of the Electoral College was that voters in their respective states would vote for Electors who would best represent their interests in a Presidential vote: these Electors were supposed to be wise and understanding men who could be relied on to vote in a principled manner. Article ii, Section 1 of the USA Constitution describes how this was to be done. When it became clear that electors were not in fact acting impartially, but only at the behest of the voters, some of the Founding Fathers were horrified. And like so many political institutions the world over, the Electoral College does not now live up to its original expectations, but is also too entrenched in the political process to be removed.

The purpose of this post is to determine the voting power of the "swing states", in which most of a Presidential campaign is conducted. It has been estimated that something like 75% of Americans are ignored in a campaign; this might be true, but that's just plain politics. For example California (with 55 Electoral College cotes) is so likely to return a Democrat candidate that it may be considered a "safe state" (at least, for the Democrats); it would be a waste of time for a candidate to spend too much time there. Instead, a candidate should stump in Florida, for example, which is considered a swing state, and may go either way: we have seen how close votes in Florida can be.

For discussion about measuring voting power using power indices check out the previous two blog posts.

The American Electoral College

According to the excellent site 270 to win and their very useful election histories, we can determine which states have voted "the same" for any election post 1964. Taking 2000 as a reasonable starting point, we have the following results. Some states have voted the same in every election from 2000 onwards; others have not.

| Safe Democrat | Safe Republican | Swing | |||

|---|---|---|---|---|---|

| California | 55 | Alabama | 9 | Colorado | 9 |

| Connecticut | 7 | Alaska | 3 | Florida | 29 |

| Delaware | 3 | Arizona | 11 | Idaho | 4 |

| DC | 3 | Arkansas | 6 | Indiana | 11 |

| Hawaii | 4 | Georgia | 16 | Iowa | 6 |

| Illinois | 20 | Kansas | 6 | Michigan | 16 |

| Maine | 3 | Kentucky | 8 | Nevada | 6 |

| Maryland | 10 | Louisiana | 8 | New Hampshire | 4 |

| Massachusetts | 11 | Mississippi | 6 | New Mexico | 5 |

| Minnesota | 10 | Missouri | 10 | North Carolina | 15 |

| New Jersey | 14 | Montana | 3 | Ohio | 18 |

| New York | 29 | Nebraska | 4 | Pennsylvania | 20 |

| Oregon | 7 | North Dakota | 3 | Virginia | 13 |

| Rhode Island | 4 | Oklahoma | 7 | Wisconsin | 10 |

| Vermont | 3 | South Carolina | 9 | Maine CD 2 | 1 |

| Washington | 12 | South Dakota | 3 | Nebraska CD 2 | 1 |

| Tennessee | 11 | ||||

| Texas | 38 | ||||

| Utah | 6 | ||||

| West Virginia | 5 | ||||

| Wyoming | 3 | ||||

| 195 | 175 | 168 |

From the table, we see that since 2000, we can count on 195 "safe" Electoral College votes for the Democrats, and 175 "safe" Electoral College votes for the Republicans. Thus of the 168 undecided votes, for a Democrat win the party must obtain at least 75 votes, and for a Republican win, the party needs to amass 95 votes.

Note that according to the site, of the votes in Maine and Nebraska, all but one are considered safe - remember that these are the only two states to apportion votes by Congressional district. Of Maine's 4 Electoral College votes, 3 are safe Democrat and one is a swing vote; for Nebraska, 4 of its votes are safe Republican, and 1 is a swing vote.

All this means is that a Democrat candidate should be campaigning considering the power given by

\[ [75; 9,29,4,11,6,16,6,4,5,15,18,20,13,10,1,1] \]

and a Republican candidate will be working with

\[ [95; 9,29,4,11,6,16,6,4,5,15,18,20,13,10,1,1] \]

A Democrat campaign

So let's imagine a Democrat candidate who wishes to maximize the efforts of the campaign by concentrating more on states with the greatest power to influence the election.

In [1]: q = 75; w = [9,29,4,11,6,16,6,4,5,15,18,20,13,10,1,1]

In [2]: b = banzhaf(q,w); bn = [sy.Float(x/sum(b)) for x in b]; [sy.N(x,4) for x

in bn]

Out[2]: [0.05192, 0.1867, 0.02271, 0.06426, 0.03478, 0.09467, 0.03478, 0.02271,

0.02870, 0.08801, 0.1060, 0.1196, 0.07515, 0.05800, 0.005994, 0.005994]

In [3]: s = shapley(q,w); [sy.N(x/sum(s),4) for x in s]

out[3]: [0.05102, 0.1902, 0.02188, 0.06375, 0.03367, 0.09531, 0.03367, 0.02188,

0.02770, 0.08833, 0.1073, 0.1217, 0.07506, 0.05723, 0.005662, 0.005662]

The values are not the same, but they are in fact quite close, and in this case they are comparable to the numbers of Electoral votes in each state. To compare values, it will be most efficient to set up a Dataframe using Python's data analysis library pandas. We shall also convert the Banzhaf and Shapley-Shubik values from sympy floats int ordinary python floats.

In [4]: import pandas as pd

In [5]: bf = [float(x) for x in bn]

In [6]: sf = [float(x) for x in s]

In [5]: d = {"States":states, "EC Votes":ec_votes, "Banzhaf indices":bf, "Shapley-Shubik indices:sf}

In [6]: swings = pd.DataFrame(d)

In [7]: swings.sort_values(by = "EC Votes", ascending = False)

In [8]: ssings.sort_values(by = "Banzhaf indices", ascending = False)

In [9]: swings.sort_values(by = "Shapley-Shubik indices", ascending = False)

We won't show the results of the last three expressions, but they all give rise to the same ordering.

We can still get some information by not looking so much at the values of the power indices, but their relative values to the number of Electoral votes. To do this we need a new column which normalizes the Electoral votes so that their sum is unity:

In [10]: swings["Normalized EC Votes"] = swings["EC Votes"]/168.0

In [11]: swings["Ratio B to N"] = swings["Banzhaf indices"]/swings["Normalized EC Votes"]

In [12]: swings["Ratio S to N"] = swings_d["Shapley-Shubik indices"]/swings["Normalized EC Votes"]

In [13]: swings.sort_values(by = "EC Votes", ascending = False)

The following table shows the result.

| States | EC Votes | Banzhaf indices | Shapley-Shubik indices | EC Votes Normalized | Ratio B to N | Ratio S to N |

|---|---|---|---|---|---|---|

| Florida | 29 | 0.186702 | 0.190164 | 0.172619 | 1.081585 | 1.101640 |

| Pennsylvania | 20 | 0.119575 | 0.121732 | 0.119048 | 1.004430 | 1.022552 |

| Ohio | 18 | 0.106034 | 0.107289 | 0.107143 | 0.989655 | 1.001360 |

| Michigan | 16 | 0.094671 | 0.095309 | 0.095238 | 0.994049 | 1.000743 |

| North Carolina | 15 | 0.088014 | 0.088330 | 0.089286 | 0.985754 | 0.989293 |

| Virginia | 13 | 0.075149 | 0.075057 | 0.077381 | 0.971155 | 0.969966 |

| Indiana | 11 | 0.064261 | 0.063752 | 0.065476 | 0.981447 | 0.973660 |

| Wisconsin | 10 | 0.058004 | 0.057227 | 0.059524 | 0.974471 | 0.961422 |

| Colorado | 9 | 0.051922 | 0.051017 | 0.053571 | 0.969215 | 0.952318 |

| Iowa | 6 | 0.034777 | 0.033670 | 0.035714 | 0.973770 | 0.942774 |

| Nevada | 6 | 0.034777 | 0.033670 | 0.035714 | 0.973770 | 0.942774 |

| New Mexico | 5 | 0.028695 | 0.027704 | 0.029762 | 0.964169 | 0.930862 |

| Idaho | 4 | 0.022714 | 0.021877 | 0.023810 | 0.953972 | 0.918823 |

| New Hampshire | 4 | 0.022714 | 0.021877 | 0.023810 | 0.953972 | 0.918823 |

| Maine CD 2 | 1 | 0.005994 | 0.005662 | 0.005952 | 1.007058 | 0.951282 |

| Nebraska CD 2 | 1 | 0.005994 | 0.005662 | 0.005952 | 1.007058 | 0.951282 |

We can thus infer that a Democrat candidate should indeed campaign most vigorously in the states with the largest number of Electoral votes. This might seem to be obvious, but as we have shown in previous posts, there is not always a correlation between voting weight and voting power, and that a voter with a low weight might end up having considerable power.

A Republican candidate

Going through all of the above, but with a quota of 95, produces in the end the following:

| States | EC Votes | Banzhaf indices | Shapley-Shubik indices | EC Votes Normalized | Ratio B to N | Ratio S to N |

|---|---|---|---|---|---|---|

| Florida | 29 | 0.186024 | 0.190086 | 0.172619 | 1.077658 | 1.101190 |

| Pennsylvania | 20 | 0.119789 | 0.121871 | 0.119048 | 1.006230 | 1.023718 |

| Ohio | 18 | 0.106258 | 0.107258 | 0.107143 | 0.991741 | 1.001075 |

| Michigan | 16 | 0.094453 | 0.095156 | 0.095238 | 0.991756 | 0.999140 |

| North Carolina | 15 | 0.088106 | 0.088410 | 0.089286 | 0.986789 | 0.990194 |

| Virginia | 13 | 0.075362 | 0.074940 | 0.077381 | 0.973906 | 0.968460 |

| Indiana | 11 | 0.064064 | 0.063568 | 0.065476 | 0.978439 | 0.970862 |

| Wisconsin | 10 | 0.058073 | 0.057394 | 0.059524 | 0.975628 | 0.964219 |

| Colorado | 9 | 0.052133 | 0.051209 | 0.053571 | 0.973140 | 0.955892 |

| Iowa | 6 | 0.034692 | 0.033612 | 0.035714 | 0.971363 | 0.941142 |

| Nevada | 6 | 0.034692 | 0.033612 | 0.035714 | 0.971363 | 0.941142 |

| New Mexico | 5 | 0.028776 | 0.027715 | 0.029762 | 0.966885 | 0.931235 |

| Idaho | 4 | 0.022912 | 0.021963 | 0.023810 | 0.962300 | 0.922436 |

| New Hampshire | 4 | 0.022912 | 0.021963 | 0.023810 | 0.962300 | 0.922436 |

| Maine CD 2 | 1 | 0.005877 | 0.005621 | 0.005952 | 0.987357 | 0.944289 |

| Nebraska CD | 1 | 0.005877 | 0.005621 | 0.005952 | 0.987357 | 0.944289 |

and we see a similar result as for the Democrat version, an obvious difference though being that Michigan has decreased its relative power, at least as measured using the Shapley-Shubik index. \

Voting power (2): computation

Naive implementation of Banzhaf power indices

As we saw in the previous post, computation of the power indices can become unwieldy as the number of voters increases. However, we can very simply write a program to compute the Banzhaf power indices simply by looping over all subsets of the weights:

def banzhaf1(q,w):

n = len(w)

inds = [0]*n

P = [[]] # these next three lines creates the powerset of 0,1,...,(n-1)

for i in range(n):

P += [p+[i] for p in P]

for S in P[1:]:

T = [w[s] for s in S]

if sum(T) >= q:

for s in S:

T = [t for t in S if t != s]

if sum(w[j] for j in T)<q:

inds[s]+=1

return(inds)

And we can test it:

In [1]: q = 51; w = [49,49,2]

In [2]: banzhaf(q,w)

Out[2]: [2, 2, 2]

In [3]: banzhaf(12,[4,4,4,2,2,1])

Out[3]: [10, 10, 10, 6, 6, 0

The origin of the Banzhaf power indices was when John Banzhaf explored the fairness of a local voting system where six bodies had votes 9, 9, 7, 3, 1, 1 and a majority of 16 was required to pass any motion:

In [4]: banzhaf(16,[9,9,7,3,1,1])

Out[4]: [16, 16, 16, 0, 0, 0]

This result led Banzhaf to campaign against this system as being manifestly unfair.

Implementation using polynomials

In 1976, the eminent mathematical political theorist Steven Brams, along with Paul Affuso, in an article "Power and Size: A New Paradox" (in the Journal of Theory and Decision) showed how generating functions could be used effectively to compute the Banzhaf power indices.

For example, suppose we have

\[ [6; 4,3,2,2] \]

and we wish to determine the power of the first voter. We consider the formal polynomial

\[ q_1(x) = (1+x^3)(1+x^2)(1+x^2) = 1 + 2x^2 + x^3 + x^4 + 2x^5 + x^7. \]

The coefficient of \(x^j\) is the number of ways all the other voters can combine to form a weight sum equal to \(j\). For example, there are two ways voters can join to create a sum of 5: voters 2 and 3, or voters 2 and 4. But there is only one way to create a sum of 4: with voters 3 and 4.

Then the number of ways in which voter 1 will be necessary can be found by adding all the coefficients of \(x^{6-4}\) to \(x^5\). This gives a value p(1) = 6. In general, define

\[ q_i(x) = \prod_{j\ne i}(1-x^{w_j}) = a_0 + a_1x + a_2x^2 +\cdots + a_kx^k. \]

Then it is easily shown that

\[ p(i) = \sum_{j=q-w_i}^{q-1}a_j. \]

As another example, suppose we use this method to compute Luxembourg's power in the EEC:

\[ q_6(x) = (1+x^4)^3(1+x^2)^2 = 1 + 2x^2 + 4x^4 + 6x^6 + 6x^8 + 6x^{10} + 4x^{12} + 2x^{14} + x^{16} \]

and we find \(b(6)\) by adding the coefficients of \(x^{12-w_6}\) to \(x^{12-1}\), which produces zero.

This can be readily implemented in Python, using the sympy library for symbolic computation.

import sympy as sy

def banzhaf(q,w):

sy.var('x')

n = len(w)

inds = []

for i in range(n):

p = 1

for j in range(i):

p *= (1+x**w[j])

for j in range(i+1,n):

p *= (1+x**w[j])

p = p.expand()

inds += [sum(p.coeff(x,k) for k in range(q-w[i],q))]

return(inds)

Computation of Shapley-Shubik index

The use of permutations will clearly be too unwieldy. Even for say 15 voters, there are \(2^{15}=32768\) subsets, but \(1,307,674,368,000\) permutations, which is already too big for enumeration (except possibly on a very fast machine, or in parallel, or using a clever algorithm).

The use of polynomials for computations in fact precedes the work of Brams and Affuso; it was published by Irwin Mann and Lloyd Shapley in 1962, in a "memorandum" called Values of Large Games IV: Evaluating the Electoral College Exactly which happily you can find as a PDF file here.

Building on some previous work, they showed that the Shapley-Shubik index corresponding to voter \(i\), could be defined as

\[ \Phi_i=\sum_{k=0}^{n-1}\frac{k!(n-1-k)!}{n!}\sum_{j=q-w_i}^{q-1}c_{jk} \]

where \(c_{jk}\) is the coefficient of \(x^jy^k\) in the expansion of

\[ f_i(x,y)=\prod_{m\ne i}(1+x^{w_m}y). \]

This of course has many similarities to the polynomial definition of the Banzhaf power index, and can be computed similarly:

def shapley(q,w):

sy.var('x,y')

n = len(w)

inds = []

for i in range(n):

p = 1

for j in range(i):

p *= (1+y*x**w[j])

for j in range(i+1,n):

p *= (1+y*x**w[j])

p = p.expand()

B = []

for j in range(n):

pj = p.coeff(y,j)

B += [sum(pj.coeff(x,k) for k in range(q-w[i],q))]

inds += [sum(sy.Float(B[j]/sy.binomial(n,j)/(n-j)) for j in range(n))]

return(inds)

A few simple examples

The Australian (federal) Senate consists of 76 members, of which a simple majority is required to pass a bill. It is unusual for the current elected government (which will have a majority in the lower house: the House of Representatives) also to have a majority in the Senate. Thus it is quite possible for a party with small numbers to wield significant power.

A case in point is that of the "Australian Democrats" party, founded in 1977 by a disaffected ex-Liberal politician called Don Chipp, with the uniquely Australian slogan "Keep the Bastards Honest". For nearly two decades they were a vital force in Australian politics; they have pretty much lost all power they once had, although the party still exists.

Here's a little table showing the Senate composition in various years:

| Party | 1985 | 2000 | 2020 |

|---|---|---|---|

| Government | 34 | 35 | 36 |

| Opposition | 33 | 29 | 26 |

| Democrats | 7 | 9 | |

| Independent | 1 | 1 | 1 |

| Nuclear Disarmament | 1 | ||

| Greens | 1 | 9 | |

| One Nation | 1 | 2 | |

| Centre Alliance | 1 | ||

| Lambie Party | 1 |

This composition in 1985 can be described as

\[ [39; 34,33,7,1,1]. \]

And now:

In [1]: b = banzhaf(39,[34,33,7,1,1])

[sy.N(x/sum(b),4) for x in b]

Out[1]: [0.3333, 0.3333, 0.3333, 0, 0]

In [2]: s = shapley(39,[34,33,7,1,1])

[sy.N(x,4) for x in s]

Out[2]: [0.3333, 0.3333, 0.3333, 0, 0]

Here we see that both power indices give the same result: that the Democrats had equal power in the Senate to the two major parties, and the other two senate members had no power at all.

In 2000, we have \([39;35,29,9,1,1,1]\) and:

In [1]: b = banzhaf(39,[31,29,9,1,1,1])

[sy.N(x/sum(b),4) for x in b]

Out[1]: [0.34, 0.3, 0.3, 0.02, 0.02, 0.02]

In [2]: s = shapley(39,[31,29,9,1,1,1])

[sy.N(x,4) for x in s]

Out[2]: [0.35, 0.3, 0.3, 0.01667, 0.01667, 0.01667]

We see here that the two power indices give two slightly different results, but in each case the power of the Democrats was equal to that of the opposition, and this time the parties with single members had real (if small) power.

By 2020 the Democrats have disappeared as a political force, their place being more-or-less taken (at least numerically) by the Greens:

In [1]: b = banzhaf(39,[36,26,9,2,1,1,1]

[sy.N(x/sum(b),4) for x in b]

Out[1]: [0.5306, 0.1224, 0.1224, 0.102, 0.04082, 0.04082, 0.04082]

In [2]: s = shapley(39,[36,26,9,2,1,1,1]

[sy.N(x,4) for x in s]

Out[2]: [0.5191, 0.1357, 0.1357, 0.1024, 0.03571, 0.03571, 0.03571]

This shows a very different sort of power balance to previously: the Government has much more power in the Senate, partly to having close to a majority and partly because of the fracturing of other Senate members through a host of smaller parties. Note that the Greens, while having more members that the Democrats did in 1985, have far less power. Note also that One Nation, while only having twice as many members as the singleton parties, has far more power: 2.5 times by Banzhaf, 2.8667 times by Shapley-Shubik.

Voting power

After the 2020 American Presidential election, with the usual post-election analyses and (in this case) vast numbers of lawsuits, I started looking at the Electoral College, and trying to work out how it worked in terms of power. Although power is often conflated simply with the number of votes, that's not necessarily the case. We consider power as the ability of any state to affect the outcome of an election. Clearly a state with more votes: such as California with 55, will be more powerful than a state with fewer, for example Wyoming with 3. But often power is not directly correlated with size.

For example, imagine a version of America with just 3 states, Alpha, Beta, and Gamma, with electoral votes 49, 49, 2 respectively, and 51 votes needed to win.

The following table shows the ways that the states can join to reach (or exceed) that majority, and in each case which state is "necessary" for the win:

| Winning Coalitions | Votes won | Necessary States |

|---|---|---|

| Alpha, Beta | 98 | Alpha, Beta |

| Alpha, Gamma | 51 | Alpha, Gamma |

| Beta, Gamma | 51 | Beta, Gamma |

| Alpha, Beta, Gamma | 100 | No single state |

By "necessary states" we mean a state whose votes are necessary for the win. And in looking at that table, we see that in terms of influencing the vote, Gamma, with only 2 electors, is equally as powerful as the other two states.

To give another example, the Treaty Of Rome in the 1950's established the first version of the European Common Market, with six member states, each allocated a number of votes for decision making:

| Member | Votes | |

|---|---|---|

| 1 | France | 4 |

| 2 | West Germany | 4 |

| 3 | Italy | 4 |

| 4 | The Netherlands | 2 |

| 5 | Belgium | 2 |

| 6 | Luxembourg | 1 |

The treaty determined that a quota of 12 votes was needed to pass any resolution. At first this table might seem manifestly unfair: West Germany with a population of over 55 million compared with Luxembourg's roughly 1/3 of a million, thus with something like 160 times the population, West Germany got only 4 times the number of votes of Luxembourg.

But in fact it's even worse: since 12 votes are required to win, and all the other numbers of votes are even, there is no way that Luxembourg can influence any vote at all: its voting power was zero. If another state joined, also with a vote of 1, then it and Luxembourg together can influence a vote, and so Luxembourg's voting power would increase.

A power index is some numerical value attached to a weighted vote which describes its power in this sense. Although there are many such indices, there are two which are most widely used. The first was developed by Lloyd Shapley (who would win the Nobel Prize for Economics in 2012) and Martin Shubik in 1954; the second by John Banzhaf in 1965.

Basic definitions

First, some notation. In general we will have \(n\) voters each with a weight \(w_i\), and a quota \(q\) to be reached. For the American Electoral College, the voters are the states, the weights are the numbers of Electoral votes, and \(q\) is the number of votes required: 238. This is denoted as

\[ [q; w_1, w_2,\ldots,w_n]. \]

The three state example above is thus denoted

\[ [51; 49, 49, 2] \]

and the EEC votes as

\[ [12; 4,4,4,2,2,1]. \]

The Shapley-Shubik index

Suppose we have \(n\) votes with weights \(w_1\), \(w_2\) up to \(w_n\), and a quote \(q\) required. Consider all permutations of \(1,2, \ldots,n\). For each permutation, add up the weights starting at the left, and designate as the pivot voter the first voter who causes the cumulative sum to equal or exceed the quota. For each voter \(i\), let \(s_i\) be the number of times that voter has been chosen as a pivot. Then its power index is \(s_i/n!\). This means that the sum of all power indices is unity.

Consider the three state example above, where \(w_1=w_2=49\) and \(w_3=2\), and where we compute cumulative sums only up to reaching or exceeding the quota:

| Permutation | Cumulative sum of weights | Pivot Voter |

|---|---|---|

| 1 2 3 | 49, 98 | 2 |

| 1 3 2 | 49, 51 | 3 |

| 2 1 3 | 49, 98 | 1 |

| 2 3 1 | 49, 51 | 3 |

| 3 1 2 | 2, 51 | 1 |

| 3 2 1 | 2, 51 | 2 |

We see that \(s_1=s_2=s_3=2\) and so the Shapley-Shubik power indices are all \(1/3\).

The Banzhaf index

For the Banzhaf index, we consider the winning coalitions: these are any subset \(S\) of voters for which the sum of weights is not less than \(q\). It's convenient to define a function for this:

\[ v(S) = \begin{cases} 1 & \text{if } \sum_{i\in S}w_i\ge q \cr 0 & \text{otherwise} \end{cases} \]

A voter \(i\) is necessary for a winning coalition \(S\) if \(S-\{i\}\) is not a winning coalition; that is, if \(v(S)-v(S-\{i\})=1\). If we define

\[ p(i) =\sum_S v(S)-v(s-\{i\}) \]

then \(b(i)\) is a measure of power, and the (normalized) Banzhaf power indices are defined as

\[ b(i) = \frac{p(i)}{\sum_i p(i)} \]

so that the sum of all indices (as for the Shapley-Shubik index) is again unity.

Considering the first table above, we see that \(p(1)=p(2)=p(3)=2\) and the Banzhf power indices are all \(1/3\). For this example the Banzhaf and Shapley-Shubik values agree. This is not always the case.

For the EEC example, the winning coalitions are, with necessary voters:

| Winning Coalition | Votes | Necessary voters |

|---|---|---|

| 1,2,3 | 12 | 1,2,3 |

| 1,2,4,5 | 12 | 1,2,4,5 |

| 1,3,4,5 | 12 | 1,3,4,5 |

| 2,3,4,5 | 12 | 2,3,4,5 |

| 1,2,3,6 | 13 | 1,2,3 |

| 1,2,4,5,6 | 13 | 1,2,4,5 |

| 1,3,4,5,6 | 13 | 1,3,4,5 |

| 2,3,4,5,6 | 13 | 2,3,4,5 |

| 1,2,3,4 | 14 | 1,2,3 |

| 1,2,3,5 | 14 | 1,2,3 |

| 1,2,3,4,6 | 15 | 1,2,3 |

| 1,2,3,5,6 | 15 | 1,2,3 |

| 1,2,3,4,5 | 16 | No single voter |

| 1,2,3,4,5,6 | 17 | No single voter |

Counting up the number of times each voter appears in the rightmost column, we see that

\[ p(1) = p(2) = p(3) = 10,\quad p(4) = p(5) = 6,\quad p(6) = 0 \]

and so

\[ b(1) = b(2) = b(3) = \frac{5}{21},\quad b(4) = b(5) = \frac{1}{7}. \]

Note that the power of the three biggest states is in fact only 5/3 times that of the smaller states, in spite of having twice as many votes. This is a striking example of how power is not proportional to voting weight.

Note that computing the Shapley-Shubik index could be unwieldy; there are

\[ \frac{6!}{3!2!} = 60 \]

different permutations of the weights, and clearly as the number of weights increases, possibly with very few repetitions, the number of permutations will be excessive. For the Electoral College, with 51 members, and a few states with the same numbers of voters, the total number of permutations will be

\[ \frac{51!}{(2!)^4(3!)^3(4!)(5!)(6!)(8!)} = 5368164393879631593058456306349344975896576000000000 \]

which is clearly far too large for enumeration. But as we shall see, there are other methods.

Electing a president

Every four years (barring death or some other catastrophe), the USA goes through the periodic madness of a presidential election. Wild behaviour, inaccuracies, mud-slinging from both sides have been central since George Washington's second term. And the entire business of voting is muddied by the Electoral College, the 538 members of which do the actual voting: the public, in their own voting, merely instruct the College what to do. Although it has been said that the EC "magnifies" the popular vote, this is not always the case, and quite often a president will be elected with a majority (270 or more) of Electoral College votes, in spite of losing the popular vote. This dichotomy encourages periodic calls for the College to be disbanded.

As you probably know, each of the 50 states and the District of Columbia has Electors allocated to it, roughly proportional to population. Thus California, the most populous state, has 55 electors, and several smaller states (and DC) only 3.

In all states except Maine and Nebraska, the votes are allocated on a "winner takes all" principle: that is, all the Electoral votes will be allocated to whichever candidate has obtained a plurality in that state. For only two candidates then, if a states' voters produce a simple majority of votes for one of them, that candidate gets all the EC votes.

Maine and Nebraska however, allocate their EC votes by congressional district. In each state, 2 EC votes are allocated to the winner of the popular vote in the state, and for each congressional district (2 in Maine, 3 in Nebraska), the other votes are allocated to the winner in that district.

It's been a bit of a mathematical game to determine the theoretical lowest bound on a popular vote for a president to be elected. To show how this works, imagine a miniature system with four states and 14 electoral college votes:

| State | Population | Electors |

|---|---|---|

| Abell | 100 | 3 |

| Bisly | 100 | 3 |

| Champ | 120 | 4 |

| Dairy | 120 | 4 |

Operating on the winner takes all principle in each state, 8 EC votes are required for a win. Suppose that in each state, the votes are cast as follows, for the candidates Mr Man and Mr Guy:

| State | Mr Man | Mr Guy | EC Votes to Man | EC Votes to Guy |

|---|---|---|---|---|

| Abell | 0 | 100 | 0 | 3 |

| Bisly | 0 | 100 | 0 | 3 |

| Champ | 61 | 59 | 4 | 0 |

| Dairy | 61 | 59 | 4 | 0 |

| Total | 122 | 310 | 8 | 6 |

and Mr Man wins with 8 EC votes but only about 27.3% of the popular vote. Now you might reasonably argue that this situation would never occur in practice, and probably you're right. But extreme examples such as this are used to show up inadequacies in voting systems. And sometimes very strange things do happen.

So: what is the smallest percentage of the popular vote under which a president could be elected? To experiment, we need to know the number of registered voters in each state (and it appears that the percentage of eligible citizens enrolled to vote differs markedly between the states), and the numbers of electors. The first I ran to ground here and the few states not accounted for I found information on their Attorney Generals' sites. The one state for which I couldn't find statistics was Illinois, so I used the number 7.8 million, which has been bandied about on a few news sites. The numbers of electors per state is easy to find, for example on the wikipedia page.

I make the following simplifying assumptions: all registered voters will vote; and all states operate on a winner takes all principle. Thus, for simplicity, I am not using the apportionment scheme of Maine and Nebraska. (I suspect that taking this into account wouldn't effect the result much anyway.)

Suppose that the registered voting population of each state (including DC) is \(v_i\) and the number of EC votes is \(c_i\). For any state, either the winner will be chosen by a bare majority, or all the votes will go to the loser. This becomes then a simple integer programming problem; in fact a knapsack problem. For each state, define

\[ m_i = \lfloor v_i/2\rfloor +1 \]

for the majority votes needed.

We want to minimize

\[ V = \sum_{i=1}^{51}x_im_i \]

subject to the constraint

\[ \sum_{k=1}^{51}c_ix_i \ge 270 \]

and each \(x_i\) is zero or one.

Now all we need to is set up this problem in a suitable system and solve it! I chose Julia and its JuMP modelling language, and for actually doing the dirty work, GLPK. JuMP in fact can be used with pretty much any optimisation software available, including commercial systems.

using JuMP, GLPK

states = ["Alabama","Alaska","Arizona","Arkansas","California","Colorado","Connecticut","Delaware","DC","Florida","Georgia","Hawaii",

"Idaho","llinois","Indiana","Iowa","Kansas","Kentucky","Louisiana","Maine","Maryland","Massachusetts","Michigan","Minnesota",

"Mississippi","Missouri","Montana","Nebraska","Nevada","New Hampshire","New Jersey","New Mexico","New York","North Carolina",

"North Dakota","Ohio","Oklahoma","Oregon","Pennsylvania","Rhode Island","South Carolina","South Dakota","Tennessee","Texas","Utah",

"Vermont","Virginia","Washington","West Virginia","Wisconsin","Wyoming"]

reg_voters = [3560686,597319,4281152,1755775,22047448,4238513,2375537,738563,504043,14065627,7233584,795248,1010984,7800000,4585024,

2245092,1851397,3565428,3091340,1063383,4141498,4812909,8127040,3588563,2262810,4213092,696292,1252089,1821356,913726,6486299,

1350181,13555547,6838231,540302,8080050,2259113,2924292,9091371,809821,3486879,578666,3931248,16211198,1857861,495267,5975696,

4861482,1268460,3684726,268837]

majorities = [Int(floor(x/2+1)) for x in reg_voters]

ec_votes = [9,3,11,6,55,9,7,3,3,29,16,4,4,20,11,6,6,8,8,4,10,11,16,10,6,10,3,5,6,4,14,5,29,15,3,18,7,7,20,4,9,3,11,38,6,3,13,12,5,10,3]

potus = Model(GLPK.Optimizer)

@variable(potus, x[i=1:51], Bin)

@constraint(potus, sum(ec_votes .* x) >= 270)

@objective(potus, Min, sum(majorities .* x));

Solving the problem is now easy:

optimize!(potus)

Now let's see what we've got:

vx = value.(x)

sum(ec_votes .* x)

270

votes = Int(objective_value(potus))

46146767

votes*100/sum(reg_voters)

21.584985938021866

and we see we have elected a president with slightly less than 21.6% of the popular vote.

Digging a little further, we first find the states in which a bare majority voted for the winner:

f = findall(x -> x == 1.0, vx)

for i in f

print(states[i],", ")

end

Alabama, Alaska, Arizona, Arkansas, California, Connecticut, Delaware, DC, Hawaii, Idaho,

llinois, Indiana, Iowa, Kansas, Louisiana, Maine, Minnesota, Mississippi, Montana, Nebraska,

Nevada, New Hampshire, New Mexico, North Dakota, Oklahoma, Oregon, Rhode Island, South Carolina,

South Dakota, Tennessee, Utah, Vermont, West Virginia, Wisconsin, Wyoming,

and the other states, in which every voter voted for the loser:

nf = findall(x -> x == 0.0, vx)

for i in nf

print(states[i],", ")

end

Colorado, Florida, Georgia, Kentucky, Maryland, Massachusetts, Michigan,

Missouri, New Jersey, New York, North Carolina, Ohio, Pennsylvania, Texas,

Virginia, Washington,

In point of history, the election in which the president-elect did worst was in 1824, when John Quincy Adams was elected over Andrew Jackson; this was in fact a four-way contest, and the decision was in the end made by the House of Representatives, who elected Adams by one vote. And Jackson, never one to neglect an opportunity for vindictiveness, vowed that he would destroy Adams's presidency, which he did.

More recently, since the Electoral College has sat at 538 members, in 2000 George W. Bush won in spite of losing the popular vote by 0.51%, and in 2016 Donald Trump won in spite of losing the popular vote by 2.09%.

Plenty of numbers can be found on wikipedia and elsewhere.

Enumerating the rationals

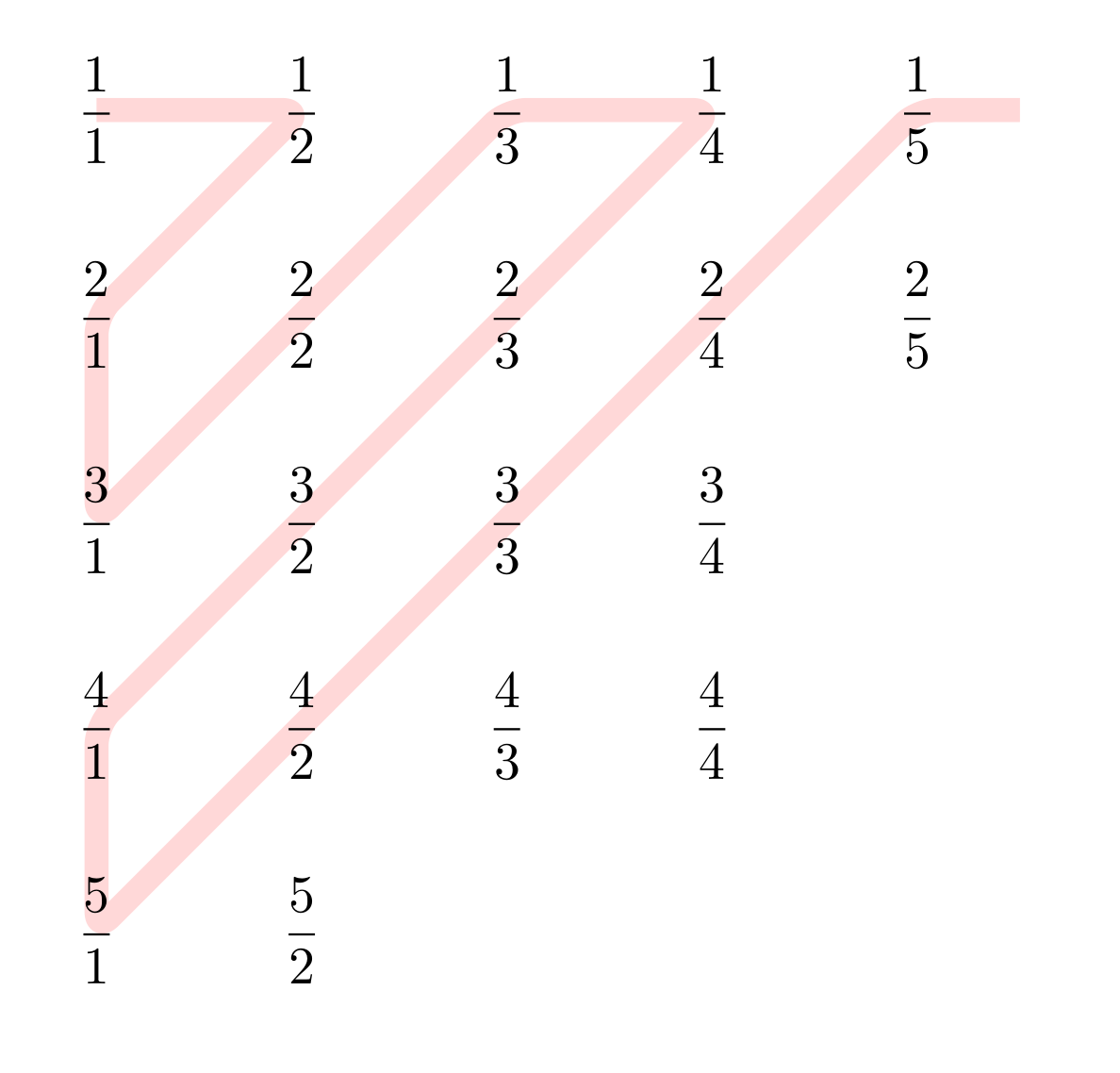

The rational numbers are well known to be countable, and one standard method of counting them is to put the positive rationals into an infinite matrix \(M=m_{ij}\), where \(m_{ij}=i/j\) so that you end up with something that looks like this:

\[ \left[\begin{array}{ccccc} \frac{1}{1}&\frac{1}{2}&\frac{1}{3}&\frac{1}{4}&\dots\\[1ex] \frac{2}{1}&\frac{2}{2}&\frac{2}{3}&\frac{2}{4}&\dots\\[1ex] \frac{3}{1}&\frac{3}{2}&\frac{3}{3}&\frac{3}{4}&\dots\\[1ex] \frac{4}{1}&\frac{4}{2}&\frac{4}{3}&\frac{4}{4}&\dots\\[1ex] \vdots&\vdots&\vdots&\vdots&\ddots \end{array}\right] \]

It is clear that not only will each positive rational appear somewhere in this matrix, but its value will appear an infinite number of times. For example \(2 / 3\) will appear also as \(4 / 6\), as \(6 / 9\) and so on.

Then we can enumerate all the elements of this matrix by traversing all the SW--NE diagonals:

This provides an enumeration of all the positive rationals: \[ \frac{1}{1}, \frac{1}{2}, \frac{2}{1}, \frac{3}{1}, \frac{2}{2}, \frac{1}{3}, \frac{1}{4}, \frac{2}{3},\ldots \] To enumerate all rationals (positive and negative), we simply place the negative of each value immediately after it: \[ \frac{1}{1}, -\frac{1}{1}, \frac{1}{2}, -\frac{1}{2}, \frac{2}{1}, -\frac{2}{1}, \frac{3}{1}, -\frac{3}{1}, \frac{2}{2}, -\frac{2}{2}, \frac{1}{3}, -\frac{1}{3}, \frac{1}{4}, \frac{1}{4}, \frac{2}{3}, -\frac{2}{3}\ldots \] This is all standard, well-known stuff, and as far as countability goes, pretty trivial.

One might reasonably ask: is there a way of enumerating all rationals in such a way that no rational is repeated, and that every rational appears naturally in its lowest form?

Indeed there is; in fact there are several, of which one of the newest, most elegant, and simplest, is using the Calkin-Wilf tree. This is named for its discoverers (or creators, depending on which philosophy of mathematics you espouse), who described it in an article happily available on the archived web site of the second author. Herbert Wilf died in 2012, but the Mathematics Department at the University of Pennsylvania have maintained the page as he left it, as an online memorial to him.

The Calkin-Wilf tree is a binary tree with root \(a / b = 1 / 1\). From each node \(a / b\) the left child is \(a / (a+b)\) and the right child is \((a+b) / b\). From each node \(a / b\), the path back to the root contains the fractions which encode, as it were, the Euclidean algorithm for determining the greatest common divisor of \(a\) and \(b\). It is not hard to show that every fraction in the tree is in its lowest terms, and appears only once; also that every rational appears in the tree.

The enumeration of the rationals can thus be made by a breadth-first transversal of the tree; in other words listing each level of the tree one after the other:

\[ \underbrace{\frac{1}{1}}_{\text{The root}},; \underbrace{\frac{1}{2},; \frac{2}{1}}_{\text{first level}},; \underbrace{\frac{1}{3},; \frac{3}{2},; \frac{2}{3},; \frac{3}{1}}_{\text{second level}},; \underbrace{\frac{1}{4},; \frac{4}{3},; \frac{3}{5},; \frac{5}{2},; \frac{2}{5},; \frac{5}{3},; \frac{3}{4},; \frac{4}{1}}_{\text{third level}};\ldots \]

Note that the denominator of each fraction is the numerator of its successor (again, this is not hard to prove in general); thus given the sequence

\[ b_i=0,1,1,2,1,3,2,3,1,4,3,5,2,5,3,4,\ldots \]

(indexed from zero), the rationals are enumerated by \(b_i/b_{i+1}\). This sequence pre-dates Calkin and Wilf; is goes back to an older enumeration now called the Stern-Brocot tree named for the mathematician Moritz Stern and the clock-maker Achille Brocot (who was investigating gear ratios), who discovered this tree independently in the early 1860's.

The sequence \(b_i\) is called Stern's diatomic sequence and can be generated recursively:

\[ b_i=\left\{\begin{array}{ll} i,&\text{if $i\le 1$}\\\ b_{i/2},&\text{if $i$ is even}\\\ b_{(i-1)/2}+b_{(i+1)/2},&\text{if $i$ is odd} \end{array} \right. \]

Alternatively: \[ b_0=0,;b_1=1,;b_{2i}=b_i,b_{2i+1}=b_i+b_{i+1}\text{ for }i\ge 1. \] This is the form in which it appears as sequence 2487 in the OEIS.

So we can generate Stern's diatomic sequence \(b_i\), and then the successive fractions \(b_i/b_{i+1}\) will generate each rational exactly once.

If that isn't remarkable enough, sometime prior to 2003, Moshe Newman showed that the Calkin-Wilf enumeration of the rationals can in fact be done directly: \[ x_0 = 1,\quad x_{i+1}=\frac{1}{2\lfloor x_i\rfloor -x_i +1};\text{for};i\ge 1 \] will generate all the rationals. I can't find anything at all about Moshe Newman; he is always just mentioned as having "shown" this result. Never where, or to whom. There is a proof for this in an article "New Looks at Old Number Theory" by Aimeric Malter, Dierk Schleicher and Don Zagier, published in The American Mathematical Monthly , Vol. 120, No. 3 (March 2013), pp. 243-264. The part of the article relating to enumeration of rationals is based on a prize-winning mathematical essay by the first author (who at the time was a high school student in Bremen, Germany), when he was only 13. Here is the skeleton of Malter's proof:

If \(x\) is any node, then its left and right hand children are \(L = x / (x+1)\) and \(R = 1+x = 1 / (1-L)\) respectively. And clearly \(R = 1/(2\lfloor L\rfloor -L +1)\). Suppose now that \(A\) is a right child, and \(B\) is its successor rational. Then \(A\) and \(B\) will have a common ancestor \(z=p/q\), say \(k\) generations ago. To get from \(z\) to \(A\) will require one left step and \(k-1\) right steps. It is easy to show (by induction if you like), that

\[ A = k-1+\frac{p}{p+q} \] and for its successor \(B\), obtained by one right step from \(z\) and \(k-1\) left steps: \[ B = \frac{1}{\frac{q}{p+q}+k-1}. \] Since \(k-1=\lfloor A\rfloor\), and since \[ \frac{p}{p+q} = A-\lfloor A\rfloor \] it follows that \[ B=\frac{1}{1-(A-\lfloor A\rfloor))+\lfloor A\rfloor}=\frac{1}{2\lfloor A\rfloor-A+1}. \] The remaining case is moving from the end of one row to the beginning of the next, that is, from \(n\) to \(1 / (n+1)\). And this is trivial.

What's more, we can write down the isomorphisms between this sequence of positive rationals and in positive integers. Define \(N:\Bbb{Q}\to\Bbb{Z}\) as follows:

\[ N(p/q)=\left\{\begin{array}{ll} 1,&\text{if $p=q$}\\\ 2 N(p/(q-p)),&\text{if $p\lt q$}\\\ 2 N((p-q)/q)+1,&\text{if $p\gt q$} \end{array} \right. \]

Without going through a formal proof, what this does is simply count the number of steps taken to perform the Euclidean algorithm on \(p\) and \(q\). The extra factors of 2 ensure that rationals in level \(k\) have values between \(2^k\) and \(2^{k+1}\), and the final "\(+1\)" differentiates left and right children. This function assumes that \(p\) and \(q\) are relatively prime; that is, that the fraction \(p/q\) is in its lowest terms.

(The isomorphism in the other direction is given by \(k\mapsto b_k/b_{k+1}\) where \(b_k\) are the elements of Stern's diatomic sequence discussed above.)

This is just the sort of mathematics I like: simple, but surprising, and with depth. What's not to like?

Fitting the SIR model of disease to data in Julia

A few posts ago I showed how to do this in Python. Now it's Julia's turn. The data is the same: spread of influenza in a British boarding school with a population of 762. This was reported in the British Medical Journal on March 4, 1978, and you can read the original short article here.

As before we use the SIR model, with equations

\begin{align*} \frac{dS}{dt}&=-\frac{\beta IS}{N}\\\ \frac{dI}{dt}&=\frac{\beta IS}{N}-\gamma I\\\ \frac{dR}{dt}&=\gamma I \end{align*}

where \(S\), \(I\), and \(R\) are the numbers of susceptible, infected, and recovered people. This model assumes a constant population - so no births or deaths - and that once recovered, a person is immune. There are more complex models which include a changing population, as well as other disease dynamics.

The above equations can be written without the population; since it is constant, we can just write \(\beta\) instead of \(\beta/N\). The values \(\beta\) and \(\gamma\) are the parameters which affect the working of this model, their values and that of their ratio \(\beta/\gamma\) provide information of the speed of the disease spread.

As with Python, our interest will be to see if we can find values of \(\beta\) and \(\gamma\) which model the school outbreak.

We will do this in three functions. The first sets up the differential equations:

using DifferentialEquations

function SIR!(du,u,p,t)

S,I,R = u

β,γ = p

du[1] = dS = -β*I*S

du[2] = dI = β*I*S - γ*I

du[3] = dR = γ*I

end

The next function determines the sum of squares between the data and the results of the SIR computations for given values of the parameters. Since we will put all our functions into one file, we can create the constant values outside any functions which might need them:

data = [1, 3, 6, 25, 73, 222, 294, 258, 237, 191, 125, 69, 27, 11, 4]

tspan = (0.0,14.0)

u0 = [762.0,1.0,0.0]

function ss(x)

prob = ODEProblem(SIR!,u0,tspan,(x[1],x[2]))

sol = solve(prob)

sol_data = sol(0:14)[2,:]

return(sum((sol_data - data) .^2))

end

Note that we don't have to carefully set up the problem to produce values at

each of the data points, which in our case are the integers from 0 to 14. Julia

will use a standard numerical technique with a dynamic step size, and values

corresponding to the data points can then be found by interpolation. All of

this functionality is provided by the DifferentialEquations package. For

example, R[10] will return the 10th value of the list of computed R values,

but R(10) will produce the interpolated value of R at \(t=10\).

Finally we use the Optim package to minimize the sum of squares, and the

Plots package to plot the result:

using Optim

using Plots

function run_optim()

opt = optimize(ss,[0.001,0.01],NelderMead())

beta,gamma = opt.minimizer

prob = ODEProblem(SIR!,u0,tspan,(beta,gamma))

sol = solve(prob)

plot(sol,

linewidth=2,

xaxis="Time in days",

label=["Susceptible" "Infected" "Recovered"])

plot!([0:14],data,linestyle=:dash,marker=:circle,markersize=4,label="Data")

end

Running the last function will produce values

\begin{align*} \beta&=0.0021806887934782853\\\ \gamma&=0.4452595474326912 \end{align*}

and the final plot looks like this:

This was at least as easy as in Python, and with a few extra bells and whistles, such as interpolation of data points. Nice!

The Butera-Pernici algorithm (2)

The purpose of this post will be to see if we can implement the algorithm in Julia, and thus leverage Julia's very fast execution time.

We are working with polynomials defined on nilpotent variables, which means that

the degree of any generator in a polynomial term will be 0 or 1. Assume that

our generators are indexed from zero: \(x_0,x_1,\ldots,x_{n-1}\), then any term in a

polynomial will have the form

\[

cx_{i_1}x_{i_2}\cdots x_{i_k}

\]

where \(\{x_{i_1}, x_{i_2},\ldots, x_{i_k}\}\subseteq\{0,1,2,\ldots,n-1\}\). We can

then express this term as an element of a dictionary {k => v} where

\[

k = 2^{i_1}+2^{i_2}+\cdots+2^{i_k}.

\]

So, for example, the polynomial term \(7x_2x_3x_5\) would correspond to the dictionary term

44 => 7

since \(44 = 2^2+2^3+2^5\). Two polynomial terms {k1 => v1} and {k2 => v2}

with no variables in common can then be multiplied simply by adding the k terms,

and multiplying the v values, to obtain {k1+k2 => v1*v2} . And we can check if

k1 and k2 have a common variable easily by evaluating k1 & k2; a non-zero

value indicates a common variable. This leads to the following Julia function

for multiplying two such dictionaries:

function poly_dict_mul(p1, p2)

p3 = Dict{BigInt,BigInt}()

for (k1, v1) in p1

for (k2, v2) in p2

if k1 & k2 > 0

continue

else

if k1 + k2 in keys(p3)

p3[k1+k2] += v1 * v2

else

p3[k1+k2] = v1 * v2

end

end

end

end

return (p3)

end

As you see, this is a simple double loop over the terms in each polynomial

dictionary. If two terms have a non-zero conjunction, we simply move on. If

two terms when added already exist in the new dictionary, we add to that term.

If the sum of terms is new, we create a new dictionary element. The use of

BigInt is to ensure that no matter how big the terms and coefficients become,

we don't suffer from arithmetic overflow.

For example, suppose we consider the product \[ (x_0+x_1+x_2)(x_1+x_2+x_3). \] A straightforward expansion produces \[ x_0x_3 + x_1x_3 + x_1^2 + x_0x_1 + x_0x_2 + 2x_1x_2 + x_2^2 + x_2x_3. \] which by nilpotency becomes \[ x_0x_3 + x_1x_3 + x_0x_1 + x_0x_2 + 2x_1x_2 + x_2x_3. \] The dictionaries corresponding to the two polynomials are

{1 => 1, 2 => 1, 4 => 1}

and

{2 => 1, 4 => 1, 8 => 1}

Then:

julia> poly_dict_mul(Dict(1=>1,2=>1,4=>1),Dict(2=>1,4=>1,8=>1))

Dict{BigInt,BigInt} with 6 entries:

9 => 1

10 => 1

3 => 1

5 => 1

6 => 2

12 => 1

If we were to rewrite the keys as binary numbers, we would have

{1001 => 1, 1010 => 1, 11 => 1, 101 => 1, 110 => 2, 1100 => 1}

in which you can see that each term corresponds with the term of the product above.

Having conquered multiplication, finding the permanent should then require two steps:

- Turning each row of the matrix into a polynomial dictionary.

- Starting with \(p=1\), multiply all rows together, one at a time.

For step 1, suppose we have a row \(i\) of a matrix \(M=m_{ij}\). Then starting

with an empty dictionary p, we move along the row, and for each non-zero element

\(m_{ij}\) we add the term p[BigInt(1)<<j] = M[i,j]. For speed we use bit

operations instead of arithmetic operations. This means we can create a list of

all polynomial dictionaries:

function mat_polys(M)

(n,ncols) = size(M)

ps = []

for i in 1:n

p = Dict{BigInt,BigInt}()

for j in 1:n

if M[i,j] == 0

continue

else

p[BigInt(1)<<(j-1)] = M[i,j]

end

end

push!(ps,p)

end

return(ps)

end

Step 2 is a simple loop; the permanent will be given as the value in the final step:

function poly_perm(M)

(n,ncols) = size(M)

mp = mat_polys(M)

p = Dict{BigInt,BigInt}(0=>1)

for i in 1:n

p = poly_dict_mul(p,mp[i])

end

return(collect(values(p))[1])

end

We don't in fact need two separate functions here; since the polynomial dictionary for each row is only used once, we could simply create each one as we needed. However, given that none of our matrices will be too large, the saving of time and space would be minimal.

Now for a few tests:

julia> n = 10; M = [BigInt(1)*mod(j-i,n) in [1,2,3] for i = 1:n, j = 1:n);

julia> poly_perm(M)

125

julia> n = 20; M = [BigInt(1)*mod(j-i,n) in [1,2,3] for i = 1:n, j = 1:n);

julia> @time poly_perm(M)

0.003214 seconds (30.65 k allocations: 690.875 KiB)

15129

julia> n = 40; M = [BigInt(1)*mod(j-i,n) in [1,2,3] for i = 1:n, j = 1:n);

julia> @time poly_perm(M)

0.014794 seconds (234.01 k allocations: 5.046 MiB)

228826129

julia> n = 100; M = [BigInt(1)*mod(j-i,n) in [1,2,3] for i = 1:n, j = 1:n);

julia> @time poly_perm(M)

0.454841 seconds (3.84 M allocations: 83.730 MiB, 27.98% gc time)

792070839848372253129

julia> lucasnum(n)+2

792070839848372253129

This is extraordinarily fast, especially compared with our previous attempts: naive attempts using all permutations, and using Ryser's algorithm.

- A few comparisons

Over the previous blog posts, we have explored various different methods of

computing the permanent:

- `permanent`, which is the most naive method, using the formal definition, and

summing over all the permutations \\(S\_n\\).

- `perm1`, Ryser's algorithm, using the Combinatorics package and iterating over

all non-empty subsets of \\(\\{1,2,\ldots,n\\}\\).

- `perm2`, Same as `perm1` but instead of using subsets, we use all non-zero

binary vectors of length n.

- `perm3`, Ryser's algorithm using Gray codes to speed the transition between

subsets, and using a lookup table.

All these are completely general, and aside from the first function, which is

the most inefficient, can be used for any matrix up to size about \\(25\times 25\\).

So consider the \\(n\times n\\) circulant matrix with three ones in each row, whose

permanent is \\(L(n)+2\\). The following table shows times in seconds (except where

minutes is used) for each calculation:

<style>.table-nocaption table { width: 75%; text-align: left; }</style>

<div class="ox-hugo-table table-nocaption">

<div></div>

| | 10 | 12 | 15 | 20 | 30 | 40 | 60 | 100 |

|-------------|--------|-------|-------|-------|-------|------|------|------|

| `permanent` | 9.3 | - | - | - | - | - | - | - |

| `perm1` | 0.014 | 0.18 | 0.72 | 47 | - | - | - | - |

| `perm2` | 0.03 | 0.105 | 2.63 | 166 | - | - | - | - |

| `perm3` | 0.004 | 0.016 | 0.15 | 12.4 | - | - | - | - |

| `poly_perm` | 0.0008 | 0.004 | 0.001 | 0.009 | 0.008 | 0.02 | 0.05 | 0.18 |

</div>

Assuming that the time taken for `permanent` is roughly proportional to \\(n!n\\),

then we would expect that the time for matrices of sizes 23 and 24 would be

about \\(1.5\times 10^{17}\\) and \\(3.8\times 10^{18}\\) seconds respectively. Note

that the age of the universe is approximately \\(4.32\times 10^{17}\\) seconds, so

my laptop would need to run for about the third of the universe's age to compute

the permanent of a \\(23\times 23\\) matrix. That's about the time since the solar

system and the Earth were formed.

Note also that `poly_perm` will slow down if the number of non-zero values in

each row increases. For example, with four consecutive ones in each row, it

takes over 10 seconds for a \\(100\times 100\\) matrix. With five ones in each row,

it takes about 2.7 and 21.6 seconds respectively for matrices of size 40 and 60.

Extrapolating indicates that it would take about 250 seconds for the \\(100\times

100\\) matrix. In general, an \\(n\times n\\) matrix with \\(k\\) non-zero elements in

each row will have a time complexity approximately of order \\(n^k\\). However,

including the extra optimization (which we haven't done) that allows for

elements to be set to one before the multiplication, produces an algorithm whose

complexity is \\(O(2^{\text{min}(2w,n)}(w+1)n^2)\\) where \\(n\\) is the size of the

matrix, and \\(w\\) its band-width. See the [original

paper](<https://arxiv.org/abs/1406.5337>) for details.

The Butera-Pernici algorithm (1)

Introduction

We know that there is no general sub-exponential algorithm for computing the permanent of a square matrix. But we may very reasonably ask -- might there be a faster, possibly even polynomial-time algorithm, for some specific classes of matrices? For example, a sparse matrix will have most terms of the permanent zero -- can this be somehow leveraged for a better algorithm?

The answer seems to be a qualified "yes". In particular, if a matrix is banded, so that most diagonals are zero, then a very fast algorithm can be applied. This algorithm is described in an online article by Paolo Butera and Mario Pernici called Sums of permanental minors using Grassmann algebra. Accompanying software (Python programs) is available at github. This software has been rewritten for the SageMath system, and you can read about it in the documentation. The algorithm as described by Butera and Pernici, and as implemented in Sage, actually produces a generating function.

Our intention here is to investigate a simpler version, which computes the permanent only.

Basic outline

Let \(M\) be an \(n\times n\) square matrix, and consider the polynomial ring on \(n\) variables \(x_1,x_2,\ldots,x_n\). Each row of the matrix will correspond to an element of this ring; in particular row \(i\) will correspond to

\[ \sum_{j=1}^nm_{ij}a_j=m_{i1}a_1+m_{i2}a_2+\cdots+m_{in}a_n. \]

Suppose further that all the generating elements \(x_i\) are nilpotent of order two, so that \(x_i^2=0\).

Now if we take all the row polynomials and multiply them, each term of the product will have order \(n\). But by nilpotency, all terms which contain a repeated element will vanish. The result will be only those terms which contain each generator exactly once, of which there will be \(n!\). To obtain the permanent all that is required is to set \(x_i=1\) for each generator.

Here's an example in Sage.

sage: R.<a,b,c,d,e,f,g,h,i,x1,x2,x3> = PolynomialRing(QQbar)

sage: M = matrix([[a,b,c],[d,e,f],[g,h,i]])

sage: X = matrix([[x1],[x2],[x3]])

sage: MX = M*X

[a*x1 + b*x2 + c*x3]

[d*x1 + e*x2 + f*x3]

[g*x1 + h*x2 + i*x3]

To implement nilpotency, it's easiest to reduce modulo the ideal defined by \(x_i^2=0\) for all \(i\). So we take the product of those row elements, and reduce:

sage: I = R.ideal([x1^2, x2^2, x3^2])

sage: pr = MX[0,0]*MX[1,0]*MX[2,0]

sage: pr.reduce(I)

c*e*g*x1*x2*x3 + b*f*g*x1*x2*x3 + c*d*h*x1*x2*x3 + a*f*h*x1*x2*x3 + b*d*i*x1*x2*x3 + a*e*i*x1*x2*x3

Finally, set each generator equal to 1:

sage: pr.reduce(I).subs({x1:1, x2:1, x3:1})

c*e*g + b*f*g + c*d*h + a*f*h + b*d*i + a*e*i

and this is indeed the permanent for a general \(3\times 3\) matrix.

Some experiments

Let's experiment now with the matrices we've seen in a previous post, which contain three consecutive super-diagonals of ones, and the rest zero.

Such a matrix is easy to set up in Sage:

sage: n = 10

sage: v = n*[0]; v[1:4] = [1,1,1]

sage: M = matrix.circulant(v)

sage: M

[0 1 1 1 0 0 0 0 0 0]

[0 0 1 1 1 0 0 0 0 0]

[0 0 0 1 1 1 0 0 0 0]

[0 0 0 0 1 1 1 0 0 0]

[0 0 0 0 0 1 1 1 0 0]

[0 0 0 0 0 0 1 1 1 0]

[0 0 0 0 0 0 0 1 1 1]

[1 0 0 0 0 0 0 0 1 1]

[1 1 0 0 0 0 0 0 0 1]

[1 1 1 0 0 0 0 0 0 0]

Similarly we can define the polynomial ring:

sage: R = PolynomialRing(QQbar,x,n)

sage: R.inject_variables()

Defining x0, x1, x2, x3, x4, x5, x6, x7, x8, x9

And now the polynomials corresponding to the rows:

sage: MX = M*matrix(R.gens()).transpose()

sage: MX

[x1 + x2 + x3]

[x2 + x3 + x4]

[x3 + x4 + x5]

[x4 + x5 + x6]

[x5 + x6 + x7]

[x6 + x7 + x8]

[x7 + x8 + x9]

[x0 + x8 + x9]

[x0 + x1 + x9]

[x0 + x1 + x2]

If we multiply them, we will end up with a huge expression, far too long to display:

sage: pr = prod(MX[i,0] for i in range(n))

sage: len(pr.monomials)

14103

We could reduce this by the ideal, but that would be slow. Far better to reduce after each separate multiplication:

sage: I = R.ideal([v^2 for v in R.gens()])

sage: p = R.one()

sage: for i in range(n):

p = p*MX[i,0]

p = p.reduce(I)

sage: p.subs({v:1 for v in R.gens())

125

The answer is almost instantaneous. We can repeat the above list of commands

starting with different values of n; for example with n=20 the result is 15129,

as we expect.

This is not yet optimal; for n=20 on my machine the final loop takes about 7.8 seconds. Butera and Pernici show that the multiplication and setting the variables to one can sometimes be done in the opposite order; that is, some variables can be identified to be set to one before the multiplication. This can speed the entire loop dramatically, and this optimization has been included in the Sage implementation. For details, see their paper.

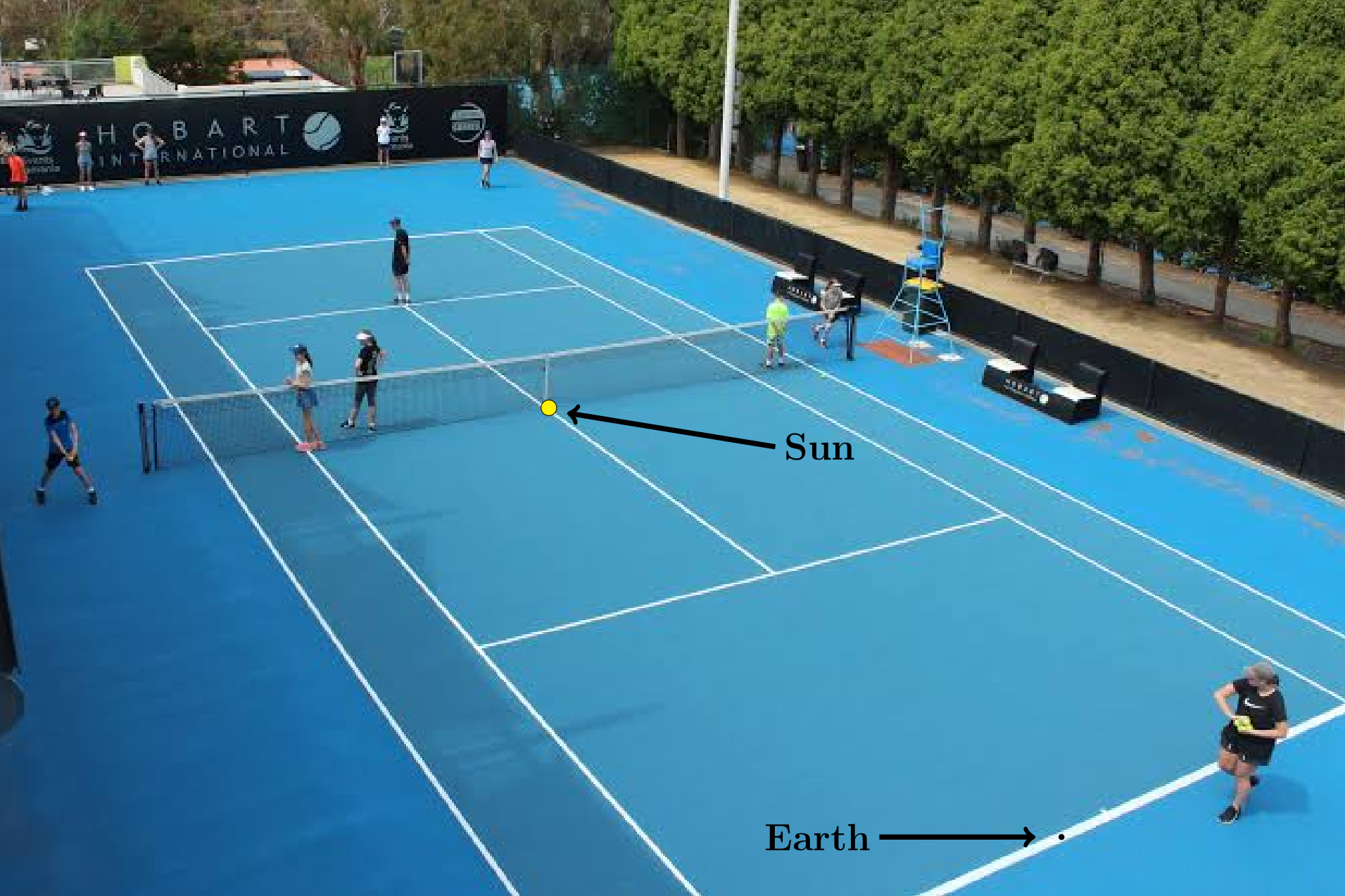

The size of the universe

As a first blog post for 2020, I'm dusting off one from my previous blog, which I've edited only slightly.

I've been looking up at the sky at night recently, and thinking about the sizes of things. Now it's all very well to say something is for example a million kilometres away; that's just a number, and as far as the real numbers go, a pretty small one (all finite numbers are "small"). The difficulty comes in trying to marry very large distances and times with our own human scale. I suppose if you're a cosmologist or astrophysicist this is trivial, but for the rest of us it's pretty daunting.

It's all a problem of scale. You can say the sun has an average distance of 149.6 million kilometres from earth (roughly 93 million miles), but how big, really, is that? I don't have any sense of how big such a distance is: my own sense of scale goes down to about 1mm in one direction, and up to about 1000km in the other. This is hopelessly inadequate for cosmological measurements.

So let's start with some numbers:

-

Diameter of Earth: 12,742 km

-

Diameter of the moon: 3,475km

-

Diameter of Sun: 1,391,684 km

-

Diameter of Jupiter: 139,822 km

-

Average distance of Earth to the sun: 149,597,870km

-

Average distance of Jupiter to the sun: 778.5 million km

-

Average distance of Earth to the moon: 384,400 km

Of course since all orbits are elliptical, distances will both exceed and be less than the average at different times. However, for our purposes of scale, an average is quite sufficient.

By doing a bit of division, we find that the moon is about 0.27 the width of the earth, Jupiter is about 11 times bigger (in linear measurements) and the Sun about 109.2 times bigger than the Earth.

Now for some scaling. We will scale the earth down to the size of a mustard seed, which is about 1mm in diameter. On this scale, the Sun is about the size of a large grapefruit (which happily is large, round, and yellow), and the moon is about the size of a dust mite:

On this new scale, with 12742 km equals 1 millimetre, the above distances become:

-

Diameter of Earth: 1mm

-

Diameter of the moon: 0.27mm

-

Diameter of Sun: 109.2m

-

Diameter of Jupiter: 10.97mm

-

Average distance of Earth to the sun: 11740mm = 11.74m

-

Average distance of Jupiter to the sun: 61097.2mm = 61.1m

-

Average distance of Earth to the moon: 30.2mm = 3cm

So how long is the distance from the sun to the Earth? Well, a cricket pitch is 22 yards long, so 11 yards from centre to end, which is about 10.1 metres. So imagine our grapefruit placed at the centre of a cricket pitch. Go to an end of the pitch, and about 1.5 metres (about 5 feet) beyond. Place the mustard seed there. What you now have is a scale model of the sun and earth.

Here's a cricket pitch to give you an idea of its size:

Note that in this picture, the yellow circle is not drawn to size. If you look just left of centre, you'll see the cricket ball, which has a diameter of about 73mm. Our "Sun" grapefruit should be about half again as wide as that.

If you don't have a sense of a cricket pitch (even with this picture), consider instead a tennis court: the distance from the net to the baseline is 39 feet, or 11.9m. At our scale, this is nearly exact:

(Note that on this scale the sun is somewhat bigger than a tennis ball, and the Earth would in fact be too small to see on this picture.)

So we now have a scale model of the Sun and Earth. If we wanted to include the Moon, start with its average distance from Earth (384,400 km), then we'd have a dust mite circling our mustard seed at a distance of 3cm.

How about Jupiter? Well, we noted before that it is about 61m away. Continuing with our cricket pitch analogy, imagine three pitches laid end to end, which is 66 yards, or 60.35 metres. Not too far off, really! So place the grapefruit at the end of the first pitch, the mustard seed a little away from centre, and at the end of the third pitch place an 11mm ball for Jupiter: a glass marble will do nicely for this.

And the size of the solar system? Assuming the edge is given by the heliopause (where the Sun's solar wind is slowed down by interstellar particles); this is at a distance of about 18,100,000,000 km from the Sun, which in our scale is about 1.42 km, or a bit less than a mile (0.88 miles). Get that? With Earth the size of a mustard seed, the edge of the solar system is nearly a mile away!

Onwards and outwards

So with this scaling we have got the solar system down to a reasonably manageable size. If 149,600,000 km seems too vast a distance to make much sense of, scaling it down to 11.7 metres is a lot easier. But let's get cosmological here, and start with a light year, which is 9,460,730,472,580,800 m, or more simply (and inexactly) 9.46× 1015m. In our scale, that becomes 742,483,948.562 mm, or about 742 km, which is about 461 miles. That's about the distance from New York city to Greensboro, NC, or from Melbourne to Sydney. The nearest star is Proxima Centauri, which is 4.3 light years away: at our Earth=mustard seed scale, that's about 3192.6 km, or 1983.8 miles. This is the flight distance from Los Angeles to Detroit. Look at that distance on an atlas, imagine our planet home mustard seed at one place and consider getting to the other.

The furthest any human has been from the mustard seed Earth is to the dust-mite Moon: 3cm, or 1.2 inches away. To get to the nearest star is, well, a lot further!

The nearest galaxy to the Milky Way is about 0.025 mly away. ("mly" = "millions of light years"). Now we're getting into the big stuff. At our scale, this distance will be 18,500,000 kilometres, which means that at our mustard seed scale, the nearest galaxy is about 18.5 million kilometres away. And there are lots of other galaxies, and much further away than this. For example, the Andromeda Galaxy is 2,538,000 light years away, which at our scale is 1,884,465,000 km -- nearly two billion kilometres!

What's remarkable is that even scaling the Earth down to a tiny mustard seed speck, we are still up against distances too vast for human scale. We could try scaling the Earth down to a ball whose diameter is the thickness of the finest human hair -- about 0.01 mm -- which is the smallest distance within reach of our own scale. But even at this scale distances are only reduced by a factor of 100, so the nearest galaxy is still 18,844,650 km away.

One last try: suppose we scale the entire Solar System, out to the heliopause, down to a mustard seed. This means that the diameter of the heliopause: 36,200,000,000 km, is scaled down to 1mm. Note that the heliopause is about three times further away from the sun than the mean distance of Pluto. At this scale, one light year is a happily manageable 261mm, or about ten and a quarter inches. So the nearest star is 1.12m away, or about 44 inches. And the nearest galaxy? Well, it's 25000 light years away, which puts it at about 6.5 km. The Andromeda Galaxy is somewhat over 663 km away. The furthest galaxy, with the enticing name of GN-z11 is said to be about 34 billion light years away. On our heliopause=mustard seed scale, that's about 9.1 million kilometres.

There's no escaping it, the Universe is big, and the scales need to describe it, no matter how you approach them, quickly leap out of of our own human scale.

Permanents and Ryser's algorithm

As I discussed in my last blog post, the permanent of an \(n\times n\) matrix \(M=m_{ij}\) is defined as \[ \text{per}(M)=\sum_{\sigma\in S_n}\prod_{i=1}^nm_{i,\sigma(i)} \] where the sum is taken over all permutations of the \(n\) numbers \(1,2,\ldots,n\). It differs from the better known determinant in having no sign changes. For example: \[ \text{per} \begin{bmatrix}a&b&c\\d&e&f\\g&h&i\end{bmatrix} =aei+afh+bfg+bdi+cdi+ceg. \] By comparison, here is the determinant: \[ \text{det} \begin{bmatrix}a&b&c\\d&e&f\\g&h&i\end{bmatrix} =aei - afh + bfg - bdi + cdi - ceg. \] The apparent simplicity of the permanent definition hides the fact that there is no known sub-exponential algorithm to compute it, nor does it satisfy most of the nice properties of determinants. For example, we have

\[ \text{det}(AB)=\text{det}(A)\text{det}(B) \]

but in general \(\text{per}(AB)\ne\text{per}(A)\text{per}(B)\). Nor is the permanent zero if two rows are equal, or if any subset of rows is linearly dependent.

Applying the definition and summing over all the permutations is prohibitively slow; of \(O(n!n)\) complexity, and unusable except for very small matrices.

In the small but excellent textbook "Combinatorial Mathematics" by Herbert J. Ryser and published in 1963, one chapter is devoted to the inclusion-exclusion principle, of which the computation of permanents is given as an example. The permanent may be considered as a sum of products, where in each product we choose one value from each row and one value from each column.